PRESENTED BY

Cyber AI Chronicle

By Simon Ganiere · 31st March 2024

Welcome back!

Project Overwatch is a cutting-edge newsletter at the intersection of cybersecurity, AI, technology, and resilience, designed to navigate the complexities of our rapidly evolving digital landscape. It delivers insightful analysis and actionable intelligence, empowering you to stay ahead in a world where staying informed is not just an option, but a necessity.

Table of Contents

What I learned this week

TL;DR

Never thought that killing that Netflix subscription at Christmas will actually lead to start Project Overwatch! I created this newsletter 3 months ago as a way to keep me honest with my learning. From where i’m sitting, it’s 100% working! Truly appreciate all of the feedback and encouragement i’m receiving! and obviously keep them coming!

An attentive reader pinged me on the back of last week newsletter. Highlight one more additional nasty scenario that can occur with API. Basically saying you can embed malicious prompt into API request so it’s being “executed” once that data is retrieved. Very much aligned to the ComPromptMized scenario.

AI workload is coming under attack. Not a big surprise if I’m being honest. Encourage you to check the article on Ray vulnerability…and well if you are using Ray…you know what you have to do!

Unpacking AI Risk & Governance - Episode #1

We have already talked about quite a few risks and governance related topics in the last few weeks from API, to third-party risk, regulation, and adversarial machine learning. I’m still trying to get my head around how to structure all of those topics (and all the ones I have touched on) as more or less every day there are new publications, new threats, new white papers, etc. and obviously a fair amount of snake oil from vendors (no offence against anyone 😄).

Obviously not trying to be more clever than some of the big institutions like NIST or MITRE ATLAS just trying to simplify some of the key concepts so it can be understood without the need for hardcore technical knowledge. I believe is here to change the world and anyone involved in risk management must understand the key aspect as soon as possible.

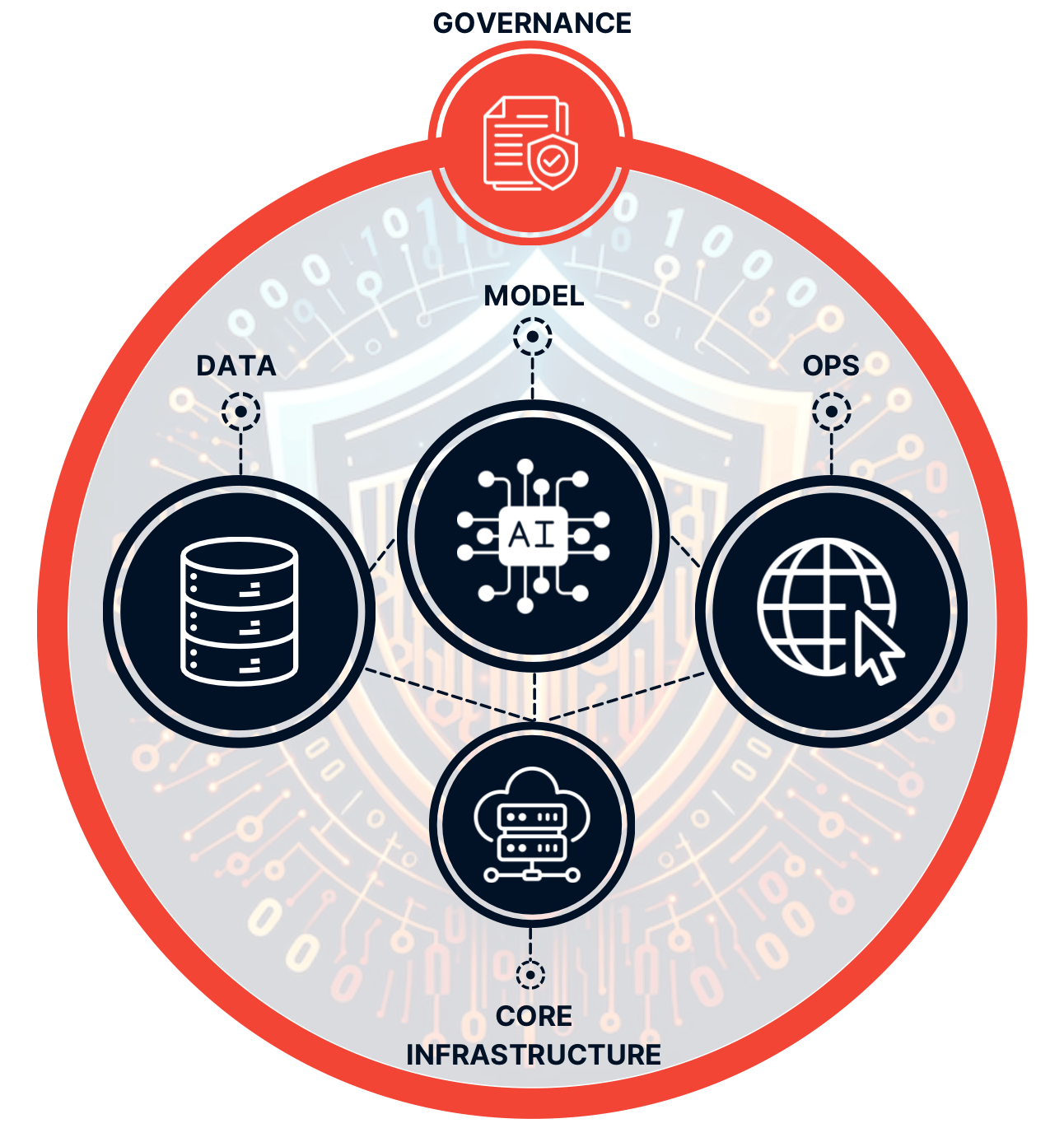

So here is how i’m trying to think about all this:

The underlying component is the overall governance around AI within your company. The objective here is to handle the overall risk management framework for the entire usage of AI. From policies, processes, stakeholder management and organisation structure to drive the AI. The other part of this, is to really drive the understanding of the risk and the threat. This is probably one of the most important part. The risk management or structure is not unknown, it can be leveraged from existing risk management setup for cyber (or other). The threat and the risk though needs to be fully mapped for your organisation.

Data is the backbone of your AI applications - no data or bad data equals no AI or bad AI applications! Data security has always been difficult and the AI era is just going to make this even more apparent and important. To adopt AI you have to feed it your enterprise data and most of the time getting control over the entire set of structured and unstructured data in a company is a huge challenge. As data is the backbone of AI, any issue (even if minor) in the data can have a huge impact - this is the butterfly effect at scale.

The core function of an AI application is the actual model. Building that model is a key step of course. This is where your machine engineering and data scientists teams are enjoying themself the most! This is, however, another key step as you need to ensure you have chosen the right technology, algorithm and most importantly ensure the trustworthiness of your model. This is where the model safety, robustness, validity and explainability is being defined and validated.

Once you have your data and your model all line up, you need to deploy and operate it. You can say, yet another app to deploy and operate, however, there are a couple of key items that need to be taken into consideration here. From your AI CI/CD pipeline, maintenance and IT operation (e.g. performance, etc.) and its security from vulnerability management of your AI, to red teaming, security monitoring and incident response plan, etc.

The core infrastructure is obviously relevant as well. The point here is you need to ensure you do the basic right. It’s not because you are working on an AI application that you should neglect your core infrastructure and bypass all of the normal controls and governance you have in your environment.

Conclusion

In the next few week, I will expand on those key areas: Governance, Data, Model and Ops. Again not trying to reinvent the wheel here as there are many great frameworks and white papers but there are a couple of key items that are worth zooming into in my opinion.

Thinking out load here, and assuming a company wants to fully embrace AI for their future growth it will require a significant shift in how things are handled today. The winners of the day? Data security and API. Without those you are taking a significant amount of risk. But there is also potentially something much bigger at play here. Something that can fully switch how we are currently governing application and this will have consequences on how we think about security.

Worth a full read

ShadowRay: First Known Attack Campaign Targeting AI Workloads

Key Takeaway

Oligo research team discovered an active attack on Ray servers exploiting a critical, disputed vulnerability.

Ray is a unified framework for scaling AI and Python applications for a variety of purposes. Ray is being used by multiple key players in the industry including OpenAI, Uber, Spotify, etc.

The vulnerability, CVE-2023-48022, allows unauthorized job execution on Ray, affecting thousands of servers. The CVE is disputed and therefore mostly remain unpatched.

Attackers exploited this flaw for 7 months, targeting sectors like education, cryptocurrency, and biopharma.

Compromised servers leaked sensitive data including AI models, environment variables, and production credentials.

Attackers monetized their access through cryptocurrency mining using the computing power of compromised GPUs.

The lack of authorization in Ray's Jobs API is a significant security oversight, exploited in these attacks.

The total value of compromised computing resources is estimated to be almost a billion USD.

Oligo's research emphasizes the need for runtime monitoring and secure deployment practices for Ray users.

Key AI Trends and Risks in the ThreatLabz 2024 AI Security Report

Key Takeaway

AI/ML tool usage surged by nearly 600%, with enterprises blocking a significant portion due to security concerns.

Enterprises are sending significant volumes of data to AI tools, with a total of 569 TB exchanged between AI/ML applications between September 2023 and January 2024.

Manufacturing, finance, and services lead in AI/ML transactions, with ChatGPT being the most used and blocked application.

The healthcare sector cautiously adopts AI, focusing on diagnostics and patient care while managing data privacy risks.

Financial services aggressively integrate AI for customer service and operational efficiency, mindful of regulatory compliance.

Government entities explore AI for public services, balancing innovation with the need for robust regulatory frameworks.

The manufacturing industry leverages AI for predictive maintenance and supply chain optimization, prioritizing data security.

Education sector's low AI transaction blocking rate suggests an embrace of AI as a learning tool, despite potential data privacy issues.

ChatGPT's adoption outpaces overall AI growth, indicating its central role in enterprise AI strategies across industries.

Regional analysis shows the US and India as leading contributors to global AI transactions, reflecting their tech innovation focus.

The report underscores the dual role of AI in enhancing cybersecurity defenses and posing new, sophisticated cyber threats.

Some more reading

U.S. Department of the Treasury published an interesting document: Managing Artificial Intelligence-Specific Cybersecurity Risks in the Financial Services Sector » READ

Scaler published their Key AI Trends report for 2024 » READ

OpenAI explained their approach on synthetic voice…said differently how to create a deepfake of your voice with only 15 seconds of data…I get it there are valid use-cases but security wise the “our partner agrees to our policies” do not sounds very encouraging » READ

Microsoft’s new safety system can catch hallucinations in its customers’ AI apps » READ

Apple CPU encryption hack - GoFetch » READ

Another great piece of work from Brian Krebs: Recent ‘MFA Bombing’ Attacks Targeting Apple Users » READ

Daniel Miesseler latest post on the “Efficient Security Principle (ESP) is one of the gold nugget that make you think hard about core security principle » READ

AI hallucinates software packages and devs download them – even if potentially poisoned with malware…or when people are already blindly trusting AI output » READ

Wisdom of the week

The first rule of handling conflict is don’t hang around people who are constantly engaging in conflict.

Contact

Let me know if you have any feedback or any topics you want me to cover. You can ping me on LinkedIn or on Twitter/X. I’ll do my best to reply promptly!

Thanks! see you next week! Simon