
Cyber AI Chronicle

By Simon Ganiere · 7th April 2024

Welcome back!

Project Overwatch is a cutting-edge newsletter at the intersection of cybersecurity, AI, technology, and resilience, designed to navigate the complexities of our rapidly evolving digital landscape. It delivers insightful analysis and actionable intelligence, empowering you to stay ahead in a world where staying informed is not just an option, but a necessity.


What I learned this week

TL;DR

I posted more of an opinion piece this week, prompted by some reading and LinkedIn posts. It explores the paradox of why data breaches still happen every day despite billions of dollars of security investment. You can read it here.

Microsoft's "Stargate" project, a reported $100 billion investment in a supercomputing cluster for OpenAI, could redefine AI development and geopolitical tech dynamics. The cost aspect is particularly interesting: if you have to spend $100 billion to reach the next level of AI (AGI?), that will seriously limit who can compete. Basic economics might get in the way, as full AI adoption will only happen if it makes economic sense. Someone will need to sell a lot of subscriptions or API usage to earn a return on this investment.

Microsoft is back in the news with the Cyber Safety Review Board (CSRB) report. A pretty damning report, if you ask me! It should raise a lot of questions for any CISO whose company relies on Microsoft (which is more or less every company). I'm not saying everybody should back away from Microsoft Azure and O365, but this situation has to raise questions about the systemic risk associated with Microsoft and other cloud service providers.

I'm still working on my RAG project. I now have a working API server that I can use to run custom queries (entirely inspired by Fabric). I'm leveraging the JSON output from the OpenAI API so I can integrate it into a basic website that keeps track of the prompts, source content and responses. I also need to check out the latest tools from Anthropic. More to come on that.

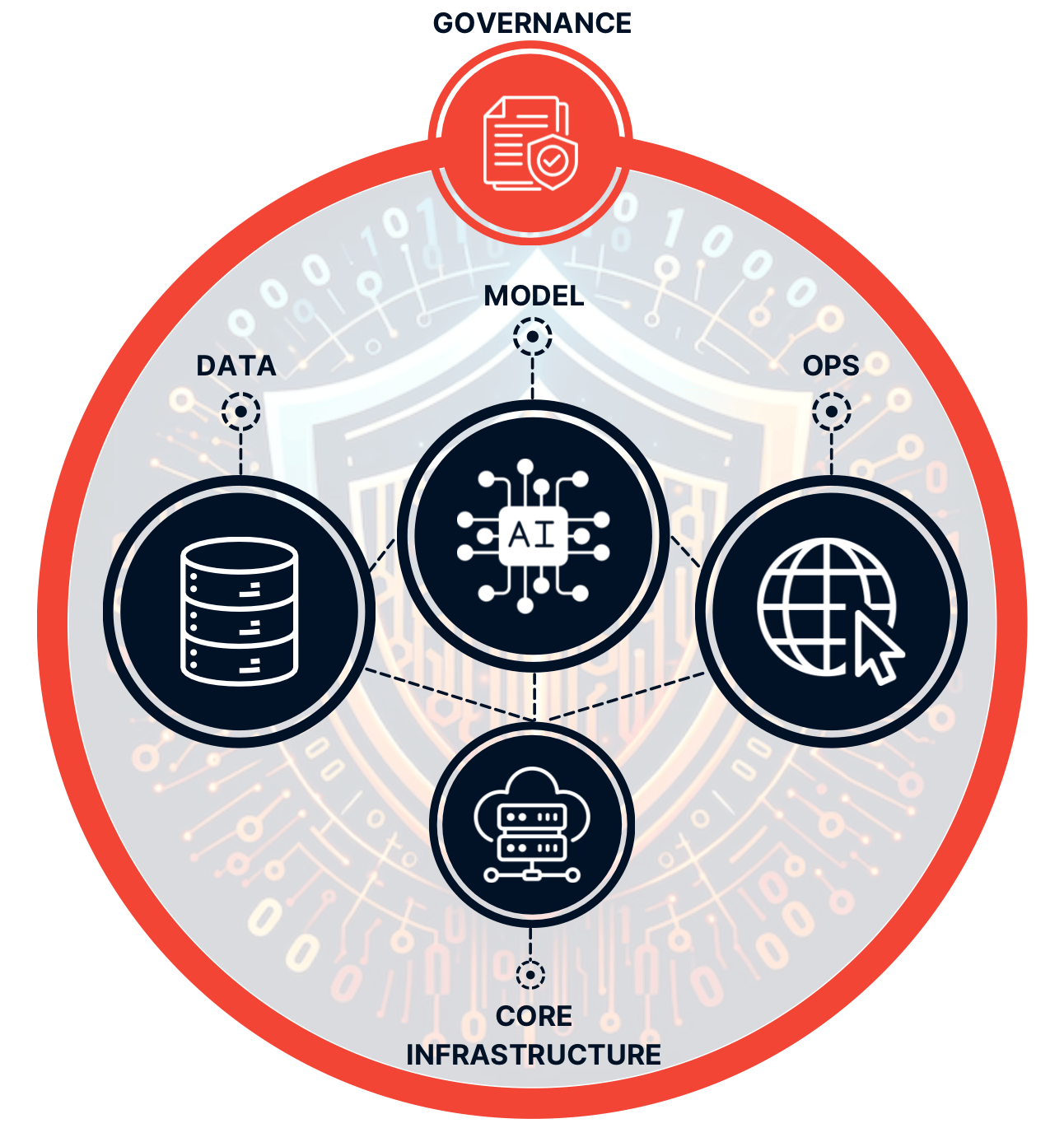

How to Tackle AI Threats with Effective Governance?

Following up on last week's introduction of my home-made AI risk management approach, this week I'm zooming in on governance. There is obviously a lot of literature on the topic, so I'm not going to cover everything related to governance. I want to focus on threat and risk assessment, as I don't see how you can assess risk without understanding the threat first!

There are many ways to do a risk assessment and almost as many frameworks. Most of them follow a similar structure:

Identify: what are we up against?

Relevance: what is relevant for us?

Prioritise: what is our priority?

Mitigate: what can we do about it?

Monitor / Improve: how do we ensure the risk is not coming back?

Last but not least, you can approach this from a qualitative (e.g. expert opinion) or a quantitative (e.g. data-driven) perspective. From there you can choose your level of detail: you can go into full-blown detail…or you can keep it simple. Remember one thing though: small progress is still progress! You can try to build the perfect model that covers all threats, all risks, all controls and all metrics…or you can go step by step, since any level of progress helps you make better risk management decisions. So keep it simple!

Identify

Input

List of threats

What are the threats to an AI system? You can start simple here and leverage an existing list of threats. The first one that comes to mind is the OWASP Top 10 for LLM Applications. The OWASP list is only a starting point; how far it takes you will depend on the full scope of your review. If the review is aimed at assessing a model, this is a great list to start with. If the review is an end-to-end assessment of an entire business process (including IT), the list will likely need some additions.

Output

A table of threat descriptions, including high-level documentation of the cause, impact and potential controls for each threat.
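To make this output a bit more concrete, here is a minimal Python sketch of such a threat table, assuming you track it as code rather than in a spreadsheet. The `Threat` fields simply mirror the output described above. The two rows use OWASP Top 10 for LLM Applications IDs (v1.1 numbering), but the cause, impact and control wording is purely illustrative, as is the hypothetical `to_markdown` helper.

```python
from dataclasses import dataclass, field


@dataclass
class Threat:
    """One row of the threat table produced by the Identify step."""
    threat_id: str
    description: str
    cause: str
    impact: str
    potential_controls: list[str] = field(default_factory=list)


# Starting point seeded from the OWASP Top 10 for LLM Applications (v1.1 IDs).
# Cause, impact and control wording is illustrative only.
THREATS = [
    Threat(
        threat_id="LLM01",
        description="Prompt Injection",
        cause="Untrusted input overrides the system instructions",
        impact="Data leakage or unintended actions by the application",
        potential_controls=["Input/output filtering", "Least-privilege tool access"],
    ),
    Threat(
        threat_id="LLM06",
        description="Sensitive Information Disclosure",
        cause="Confidential data reachable through training data, context or prompts",
        impact="Exposure of personal or business-confidential data",
        potential_controls=["Data classification", "Output filtering"],
    ),
]


def to_markdown(threats: list[Threat]) -> str:
    """Render the threat table so it can be pasted into a review document."""
    lines = [
        "| ID | Threat | Cause | Impact | Potential controls |",
        "|---|---|---|---|---|",
    ]
    for t in threats:
        controls = "; ".join(t.potential_controls)
        lines.append(f"| {t.threat_id} | {t.description} | {t.cause} | {t.impact} | {controls} |")
    return "\n".join(lines)


if __name__ == "__main__":
    print(to_markdown(THREATS))
```

Keeping the table as structured data rather than free text makes the later steps (filtering for relevance, adding scenarios, scoring) easier to automate, even if the review itself stays a human discussion.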

Relevance

Input

Your business requirements or use-cases.

Picking up on that input, the relevance discussion is really about understanding your business objectives, and that context is super important! Without the business context, this is just a technical review that will help…well, the technical team. That is great, but ultimately you need to protect the business value generated by the AI model.

The relevance discussion is also about the threats themselves, as some of them might not be applicable. For example, you might choose to use an AI-as-a-service model from a third party or go fully in-house, and this will, of course, influence what is relevant.

Output

An updated threat table with additional context and, ideally, a system diagram that shows how your AI application is going to work.
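A minimal sketch of the relevance filter, assuming each threat is tagged with the deployment models (`third_party` for AI-as-a-service, `in_house`) in which mitigating it is your own responsibility. That mapping and the `relevant_threats` helper are hypothetical: with a third-party model, training data poisoning and model theft do not disappear, they arguably move into your third-party risk management rather than your own control set.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ThreatContext:
    threat_id: str
    name: str
    # Deployment models where mitigating this threat is *our* job.
    # Hypothetical mapping for illustration, not taken from OWASP.
    owned_in: frozenset[str]
    business_process: str = ""


CATALOGUE = [
    ThreatContext("LLM01", "Prompt Injection", frozenset({"third_party", "in_house"})),
    ThreatContext("LLM03", "Training Data Poisoning", frozenset({"in_house"})),
    ThreatContext("LLM10", "Model Theft", frozenset({"in_house"})),
]


def relevant_threats(catalogue, deployment_model, business_process):
    """Keep the threats we own for this deployment model and attach business context."""
    return [
        replace(t, business_process=business_process)
        for t in catalogue
        if deployment_model in t.owned_in
    ]


if __name__ == "__main__":
    for t in relevant_threats(CATALOGUE, "third_party", "Customer support chatbot"):
        print(t.threat_id, t.name, "->", t.business_process)
```

With a third-party model only prompt injection stays in scope in this toy example, while an in-house setup keeps all three threats.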

Prioritise

Input

Information about how each threat scenario would play out, including the threat actor and the impact.

At this stage you should have a good understanding of which threats are relevant in your context. Now it's time to put the story together! This step probably takes a bit longer. With your context in hand, you can create a full list of relevant threat scenarios that match your particular setup, then build a second version of the table that includes key elements for each threat:

Scenario & threat agent/actor: what are the actor's skill level, motivation, opportunity, methods and size?

Dependencies: what needs to be in place for the scenario to occur? How easy is exploitation (are we talking nation-state level or basic)? How well known is the vulnerability (is it public?)

Impact analysis: cover all possible impacts, from technical to business, financial, reputational and compliance.

Now comes the part where we can start to quantify the whole assessment. Again, this step can be overcomplicated, but you can also keep it simple. Here we can follow, for example, the OWASP Risk Rating Methodology, which scores the likelihood and impact factors of each scenario and combines them into an overall risk severity. The methodology is pretty simple, but it's efficient at producing a priority list.
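Here is a minimal Python sketch of the OWASP Risk Rating Methodology. Each likelihood and impact factor is scored from 0 to 9 (higher means more likely or more damaging), the averages are mapped to LOW (below 3), MEDIUM (3 to below 6) or HIGH (6 and above), and the pair is looked up in the methodology's overall severity matrix. The two scenarios and their factor scores are made up for illustration, and the sketch uses a single set of impact factors (the business ones) rather than weighing technical against business impact.

```python
# OWASP Risk Rating Methodology: every factor is scored 0-9,
# higher meaning more likely (likelihood) or more damaging (impact).

SEVERITY_MATRIX = {
    # (likelihood level, impact level) -> overall severity
    ("LOW", "LOW"): "Note",
    ("MEDIUM", "LOW"): "Low",
    ("HIGH", "LOW"): "Medium",
    ("LOW", "MEDIUM"): "Low",
    ("MEDIUM", "MEDIUM"): "Medium",
    ("HIGH", "MEDIUM"): "High",
    ("LOW", "HIGH"): "Medium",
    ("MEDIUM", "HIGH"): "High",
    ("HIGH", "HIGH"): "Critical",
}
PRIORITY = {"Critical": 0, "High": 1, "Medium": 2, "Low": 3, "Note": 4}


def level(factors: dict[str, int]) -> str:
    """Average the factor scores and map them to the LOW / MEDIUM / HIGH bands."""
    scores = list(factors.values())
    average = sum(scores) / len(scores)
    if average < 3:
        return "LOW"
    if average < 6:
        return "MEDIUM"
    return "HIGH"


def severity(likelihood: dict[str, int], impact: dict[str, int]) -> str:
    return SEVERITY_MATRIX[(level(likelihood), level(impact))]


# Hypothetical scenarios: likelihood uses the threat agent and vulnerability
# factors, impact uses the business impact factors.
SCENARIOS = {
    "Prompt injection against the customer chatbot": (
        {"skill_level": 6, "motive": 4, "opportunity": 7, "size": 9,
         "ease_of_discovery": 7, "ease_of_exploit": 5, "awareness": 9,
         "intrusion_detection": 8},
        {"financial_damage": 3, "reputation_damage": 5,
         "non_compliance": 5, "privacy_violation": 5},
    ),
    "Theft of the fine-tuned model": (
        {"skill_level": 3, "motive": 4, "opportunity": 4, "size": 2,
         "ease_of_discovery": 3, "ease_of_exploit": 1, "awareness": 1,
         "intrusion_detection": 3},
        {"financial_damage": 7, "reputation_damage": 4,
         "non_compliance": 2, "privacy_violation": 0},
    ),
}


def priority_list(scenarios):
    """Rate every scenario and sort from most to least severe."""
    rated = [(name, severity(lik, imp)) for name, (lik, imp) in scenarios.items()]
    return sorted(rated, key=lambda item: PRIORITY[item[1]])


if __name__ == "__main__":
    for name, rating in priority_list(SCENARIOS):
        print(f"{rating:<8} {name}")
```

With these made-up scores, the prompt injection scenario averages 6.875 for likelihood (HIGH) and 4.5 for impact (MEDIUM), so it is rated High and lands at the top of the list; the model theft scenario comes out as Low.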

Output

A table listing the threats, mapped to their scenarios, dependencies and impact analysis.

A risk severity score for each scenario, ultimately leading to a priority list.

Mitigate

Input

The threat scenario priority list, so you can focus on mitigating the right threats.

Now that you know what your risk priorities are, you can review in detail which controls you need to implement or update to mitigate those risks.

Output

A list of control improvements, ultimately leading to a project to deliver them.
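A minimal sketch of turning the priority list into a control improvement backlog. The severities reuse the OWASP Risk Rating labels; the control states (`implemented`, `partial`, `missing`), the default `Medium` threshold and the example scenarios are assumptions for illustration, not part of any framework.

```python
from dataclasses import dataclass

# Same ordering as the OWASP Risk Rating severities (0 = most severe).
PRIORITY = {"Critical": 0, "High": 1, "Medium": 2, "Low": 3, "Note": 4}


@dataclass
class Control:
    name: str
    status: str  # assumed states: "implemented", "partial" or "missing"


@dataclass
class RatedScenario:
    name: str
    severity: str
    controls: list[Control]


def control_backlog(scenarios, threshold="Medium"):
    """List control improvements for scenarios rated at or above `threshold`,
    most severe first. Lower-rated scenarios are left for the monitoring step."""
    backlog = []
    for s in sorted(scenarios, key=lambda sc: PRIORITY[sc.severity]):
        if PRIORITY[s.severity] > PRIORITY[threshold]:
            continue
        for c in s.controls:
            if c.status == "missing":
                backlog.append((s.severity, s.name, f"implement: {c.name}"))
            elif c.status == "partial":
                backlog.append((s.severity, s.name, f"update: {c.name}"))
    return backlog


EXAMPLE = [
    RatedScenario("Theft of the fine-tuned model", "Low",
                  [Control("Model access logging", "missing")]),
    RatedScenario("Prompt injection against the customer chatbot", "High",
                  [Control("Output filtering", "partial"),
                   Control("Least-privilege tool access", "missing"),
                   Control("Rate limiting", "implemented")]),
]

if __name__ == "__main__":
    for item in control_backlog(EXAMPLE):
        print(*item, sep=" | ")
```

Here the High-rated prompt injection scenario produces two backlog items, while the Low-rated model theft scenario is left out until you lower the threshold.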

Monitor / Improve

Input

Threat scenarios and control status

No system is static. The external threat landscape will adapt, with new ways of performing attacks. The internal landscape will change as well: your company's priorities and technology stack will keep evolving (e.g. new applications, technical debt, resource challenges, regulatory requirements). You need strong monitoring of those parameters so you can stay on top of both the external and internal threat landscape.

Output

Intelligence on the internal and external threat landscape.

Plans to adapt the controls as necessary.
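As a minimal sketch of the monitoring loop, the snippet below flags scenarios that need another look: controls not fully implemented, a review older than an assumed 90-day cycle, or a match against keywords from new threat intelligence. The field names, the review cycle and the keyword matching are hypothetical simplifications; in practice the intelligence input would come from your threat intelligence sources rather than a keyword tuple.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class MonitoredScenario:
    name: str
    control_status: str  # assumed states: "implemented", "partial", "missing"
    last_reviewed: date


def needs_review(scenarios, today, max_age_days=90, intel_keywords=()):
    """Return {scenario name: reasons} for every scenario that needs another look."""
    flagged = {}
    for s in scenarios:
        reasons = []
        if s.control_status != "implemented":
            reasons.append("control not fully implemented")
        if today - s.last_reviewed > timedelta(days=max_age_days):
            reasons.append(f"review older than {max_age_days} days")
        if any(k.lower() in s.name.lower() for k in intel_keywords):
            reasons.append("mentioned in new threat intelligence")
        if reasons:
            flagged[s.name] = reasons
    return flagged


SCENARIOS = [
    MonitoredScenario("Prompt injection against the customer chatbot", "implemented", date(2024, 3, 1)),
    MonitoredScenario("Malicious model uploaded to the model registry", "partial", date(2024, 1, 2)),
    MonitoredScenario("Theft of the fine-tuned model", "implemented", date(2024, 3, 20)),
]

if __name__ == "__main__":
    report = needs_review(SCENARIOS, today=date(2024, 4, 7), intel_keywords=("prompt injection",))
    for name, reasons in report.items():
        print(name, "->", ", ".join(reasons))
```

The point is less the code than the habit: every scenario gets a reason to be revisited, whether the trigger is internal (control status, review age) or external (new intelligence).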

Conclusion

Yes, that was a lot, even though I tried to simplify it as much as possible!

These risk assessments are the key first steps in the governance process: you need to know what you are up against and what your control environment looks like. There are other steps at the governance level, including some basic components that should not be skipped (in no particular order, and not an exhaustive list):

AI Committee to review and make those decisions. This forum cannot be just a technical meeting sitting within your technology team; it has to involve the business and the data owners.

AI system inventory. This is basic but often overlooked for multiple reasons. Not knowing which AI systems you have is a big problem (very similar to the traditional cyber problem of not knowing which assets you need to protect). Do note this is an EU AI Act requirement.

AI system risk rating. You need an overarching set of rules defining which AI systems represent too much risk. You might decide, for example, that AI systems covering specific use-cases should not exist in your company. This is very much aligned with the EU AI Act requirements, just applied at company level.
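A minimal sketch of an AI system inventory combined with a company-level risk rating rule. The tier names loosely follow the EU AI Act's risk categories (prohibited, high, limited, minimal), but the use-case to tier mapping in `COMPANY_POLICY`, the example systems and the checks are hypothetical and not a legal classification. Anything unclassified is escalated to the AI Committee.

```python
from dataclasses import dataclass

# Tier names loosely follow the EU AI Act risk categories. The mapping of
# use-cases to tiers is a HYPOTHETICAL company policy, not a legal classification.
COMPANY_POLICY = {
    "internal document search": "minimal",
    "customer support chatbot": "limited",
    "cv screening": "high",
    "emotion recognition of employees": "prohibited",
}


@dataclass
class AISystem:
    name: str
    use_case: str
    business_owner: str
    provider: str  # "in-house" or the third-party vendor


def assess_inventory(systems):
    """Assign a tier to each inventoried system and collect governance issues."""
    tiers, issues = {}, []
    for s in systems:
        tier = COMPANY_POLICY.get(s.use_case)
        if tier is None:
            issues.append(f"{s.name}: use-case not classified, escalate to the AI Committee")
            continue
        tiers[s.name] = tier
        if tier == "prohibited":
            issues.append(f"{s.name}: use-case not allowed under company policy")
        if not s.business_owner:
            issues.append(f"{s.name}: no business owner")
    return tiers, issues


# Hypothetical inventory entries for illustration.
INVENTORY = [
    AISystem("helpdesk-bot", "customer support chatbot", "Customer Service", "third-party"),
    AISystem("cv-ranker", "cv screening", "", "in-house"),
    AISystem("mood-monitor", "emotion recognition of employees", "HR", "third-party"),
    AISystem("sales-forecaster", "sales forecasting", "Sales", "third-party"),
]

if __name__ == "__main__":
    tiers, issues = assess_inventory(INVENTORY)
    print(tiers)
    print("\n".join(issues))
```

Even this toy version shows why the inventory comes first: you cannot apply a risk rating rule, or assign a business owner, to a system you do not know exists.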

I will try to put together a checklist and an example of the above analysis to illustrate this further. More to come!

Worth a full read

Cyber Safety Review Board Releases Report on Microsoft Online Exchange Incident from Summer 2023 | CISA

Key Takeaway

Microsoft's security culture was deemed inadequate due to a series of avoidable errors and a lack of rigorous risk management.

The intrusion was preventable; Microsoft's failure to update its security practices allowed the breach to succeed.

Microsoft did not detect the key compromise on its own, relying instead on an alert from the State Department.

Microsoft's public communications about the incident contained significant inaccuracies and were not timely corrected.

Recommendations include Microsoft focusing on security culture, deprioritizing feature development in favor of security improvements, and CSPs adopting modern control mechanisms for identity systems.

CSPs should provide default audit logging as a core service without additional charges to enhance intrusion detection and investigation.

A quote to finish: “Throughout this review, the Board identified a series of Microsoft operational and strategic decisions that collectively point to a corporate culture that deprioritized both enterprise security investments and rigorous risk management”

Architecture risks leading to potential compromise of AI-as-a-Service providers

Key Takeaway

Wiz Research discovered architecture vulnerabilities in AI-as-a-Service providers that put customer data at risk.

Collaboration with Hugging Face led to mitigations against these security risks.

AI adoption's rapid pace necessitates mature security practices akin to those for public clouds.

Over 70% of cloud environments use AI services, highlighting the significance of these findings.

Malicious models can compromise AI systems, allowing attackers cross-tenant access.

Shared inference infrastructure and CI/CD pipelines are major risk points for AI-as-a-Service platforms.

Different AI/ML applications require distinct security considerations for models, applications, and infrastructure.

Hugging Face's mission includes democratizing machine learning and maintaining awareness of AI/ML risks.

Wiz's research emphasizes the importance of isolation in preventing malicious cross-tenant access.

Recommendations include sandboxing untrusted AI models and enhancing platform integrity through secure practices.

Some more reading

The XZ backdoor story: a Wiz summary » READ and the full timeline (and oh boy, that is a long timeline) » READ

Microsoft announced new tools in Azure AI to help build more secure and trustworthy generative AI applications » READ

The Modern Security Podcast: How Github’s Chief Security Officer blends security & engineering (YouTube video) » READ

A review of zero-day in-the-wild exploits in 2023 (Google / Mandiant) » READ

A beginner’s Guide to Tracking Malware Infrastructure » READ

Fake Facebook MidJourney AI page promoted malware to 1.2 million people » READ

‘The machine did it coldly’: Israel used AI to identify 37,000 Hamas targets » READ

Cybereason Ransomware: the true cost to business » Direct link to PDF

Wisdom of the week

😛

Contact

Let me know if you have any feedback or any topics you want me to cover. You can ping me on LinkedIn or on Twitter/X. I’ll do my best to reply promptly!

Thanks! See you next week! Simon