
Cyber AI Chronicle

By Simon Ganiere · 27th October 2024

Welcome back!

Project Overwatch is a cutting-edge newsletter at the intersection of cybersecurity, AI, technology, and resilience, designed to navigate the complexities of our rapidly evolving digital landscape. It delivers insightful analysis and actionable intelligence, empowering you to stay ahead in a world where staying informed is not just an option, but a necessity.


What I learned this week

TL;DR

Last week we went through the basics of AI programming assistants: how they have evolved and how they aim to improve developer productivity. This week we are focusing on the security aspect! At this stage, there is still a lot that CISOs and security teams need to know and do to ensure these AI programming assistants are not introducing vulnerabilities into the code base.

Anthropic released an upgraded version of Claude 3.5 Sonnet and a new model, Claude 3.5 Haiku. The upgraded Sonnet brings across-the-board improvements, with particularly significant gains in coding. The really big feature, though, is in public beta (via the API) and is called computer use: Claude can directly operate a computer by looking at a screen, moving a cursor, clicking buttons and typing text. If that sounds familiar, it's because it looks like an enhanced version of robotic process automation (RPA) with an LLM on top: you simply ask for something and watch the LLM do it. And of course, people are already finding ways to misuse it.

Microsoft also announced new autonomous agent capabilities in Copilot Studio. These agents are called autonomous because they can automatically respond to signals across your business and initiate tasks. Instead of waiting for human input, they can be configured to react to events and triggers originating from various tools, systems and databases, or even be scheduled to run hourly, daily, weekly or monthly.

The SEC fined four companies for “misleading cyber disclosures” related to the SolarWinds incident, for a total of about $7 million. I don't think that amount will have a significant impact, but it's a strong signal from the SEC. LinkedIn was also hit with a $310 million fine by EU privacy regulators.

In cyber good news:

Apple released extensive documentation on the security of its Private Cloud Compute, along with a bug bounty program offering rewards of up to $1 million!

A new security feature named “memory sealing” has been released in Linux kernel 6.10. There is a great blog post that provides more information and details (warning: technical content).

Volexity released a great research paper about EDR evasion techniques.

AI Programming Assistants: What CISOs Should Know

This is the second article in a two-part series on AI programming assistants. The first article focused on what AI programming assistants are.

AI programming assistants have rapidly integrated into software development workflows, offering developers unprecedented support in writing and debugging code. However, while these tools provide valuable benefits, they also introduce significant security risks that CISOs and security teams must address. As AI-generated code becomes more prevalent, the responsibility of ensuring that this code is secure and reliable falls squarely on the shoulders of organizations.

We'll explore the security concerns that come with AI programming assistants, and outline strategies that CISOs can adopt to mitigate these risks and safeguard their organization's software development processes.

The Security Risks of AI Programming Assistants

While AI coding tools like GitHub Copilot or Tabnine help developers accelerate code production, they are far from perfect. These tools have been shown to generate insecure code with surprising frequency. According to a recent report, 91.6% of developers surveyed admitted that AI programming assistants sometimes or frequently suggest insecure code. This can include the use of deprecated libraries, poor encryption practices, or other vulnerabilities that can lead to significant security issues.

Let's delve into some specific security risks:

Insecure Code Generation

AI tools are often trained on massive amounts of public code, which includes both good and bad practices. This makes them prone to suggesting insecure code snippets. For example, in a Stanford study, AI tools frequently suggested outdated cryptographic primitives such as MD5, which is no longer considered secure. Developers, particularly those without extensive security training, might assume that the AI's suggestions are reliable, leading to vulnerabilities being introduced into the software.
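To make the MD5 point concrete, here is a minimal Python sketch contrasting the kind of fast, unsalted MD5 password hashing an assistant might suggest with a salted, memory-hard alternative from the standard library (hashlib.scrypt). The function names and the scrypt parameters are illustrative assumptions, not a vetted configuration.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple


# Pattern an assistant might plausibly suggest: fast, unsalted MD5.
# MD5 is broken for collision resistance and far too fast for password storage.
def hash_password_insecure(password: str) -> str:
    return hashlib.md5(password.encode()).hexdigest()


# Safer sketch: salted, memory-hard scrypt from the standard library.
# n=2**14, r=8, p=1 are common illustrative values, not a tuned recommendation.
def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    salt = salt if salt is not None else os.urandom(16)  # fresh random salt per password
    digest = hashlib.scrypt(password.encode(), salt=salt, n=2**14, r=8, p=1, dklen=32)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    _, digest = hash_password(password, salt)
    # Constant-time comparison avoids leaking how many bytes matched.
    return hmac.compare_digest(digest, expected)
```

The point is not the specific parameters but the review habit: a reviewer who sees hashlib.md5 anywhere near credentials should treat it as a finding.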

Hallucinations and Non-Existent Functions

Another common issue with AI-generated code is hallucination. This occurs when an AI tool suggests methods or packages that don't actually exist but look plausible given its training data. Developers who don't verify these suggestions might end up incorporating these hallucinated elements into their codebase, which creates gaps that attackers can exploit. For instance, a package hallucination can enable a package confusion attack: attackers publish a malicious library under a name the AI tends to invent, and a developer who installs it inadvertently integrates the malicious code into the project.
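One lightweight control against hallucinated dependencies is to block any package nobody has vetted. The Python sketch below assumes a hypothetical internal allowlist (APPROVED_PACKAGES) and a requirements-style file; it does not prove that a package is safe, it only forces a human to verify anything the assistant introduced that has not been reviewed before.

```python
import re

# Hypothetical internal allowlist of vetted packages (normalized names).
APPROVED_PACKAGES = {"requests", "cryptography", "pydantic"}


def normalize(name):
    # PEP 503-style normalization: runs of "-", "_" and "." become "-", lowercased.
    return re.sub(r"[-_.]+", "-", name).lower()


def unvetted_dependencies(requirements_text):
    """Return normalized names of requirements that are not on the allowlist."""
    flagged = []
    for line in requirements_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        # The project name is the leading run of name characters,
        # before any extras ("[...]") or version specifier (">=", "==", ...).
        match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
        if match is None:
            continue
        name = normalize(match.group(0))
        if name not in APPROVED_PACKAGES:
            flagged.append(name)
    return flagged
```

For example, running it on "requests>=2.31" plus "fastjson-parser-utils==1.0" would flag only fastjson-parser-utils, a made-up name standing in for a hallucinated package.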

New Attack Vectors

AI programming assistants can introduce entirely new attack vectors. For instance, adversaries could manipulate an AI tool by injecting malicious prompts (prompt injection) or tampering with the training data (data poisoning). This could result in the AI generating compromised code that opens backdoors or leaks sensitive information. Attackers may also exploit AI assistants to recommend unsafe libraries, exacerbating software supply chain vulnerabilities.

For example, an attacker could potentially inject malicious code into public repositories that are likely to be used for training AI models. If successful, this could lead to the AI suggesting compromised code snippets to unsuspecting developers.

Recommendations for CISOs

Given the risks, CISOs must develop strategies to secure AI-assisted development environments. While AI coding assistants are powerful tools, they require robust oversight and careful management to prevent security breaches.

Here are key strategies CISOs should consider implementing:

Enforce Human In The Loop (HITL)

Despite the advanced capabilities of AI programming assistants, human oversight remains critical. AI-generated code should never be deployed directly into production without review. Developers and security teams need to manually audit code suggestions for vulnerabilities or outdated practices. Moreover, it is essential to encourage developers not to rely too heavily on AI suggestions without critical evaluation.

Implement pair programming practices when working with AI-generated code. This can help catch potential issues that might be missed by a single developer. Additionally, establish a robust code review process specifically tailored for AI-assisted development, where reviewers are trained to look for common AI-generated vulnerabilities.

Automate Security Scans

One of the best ways to mitigate risks is by integrating automated security scans into the development pipeline. AI-generated code, just like human-written code, should pass through comprehensive security testing, including static application security testing (SAST), dynamic application security testing (DAST), and software composition analysis (SCA). These tools can detect vulnerabilities, insecure dependencies, and other issues before the code reaches production.

According to a 2024 DevSecOps report, while 85% of organizations claim to have some measures in place for securing AI-generated code, fewer than 25% are confident in their policies. This confidence gap underscores the need for more effective and automated security practices.

Integrate these security scans into your CI/CD pipelines to ensure that every piece of code, whether human-written or AI-generated, is thoroughly checked before deployment. Consider developing custom security rules that specifically target common vulnerabilities in AI-generated code, such as the use of deprecated functions or insecure API calls.
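As an illustration of such a custom rule, here is a minimal Python sketch that walks a file's syntax tree and flags calls on a hypothetical team-defined deny list (BANNED_CALLS). It only matches fully written names such as hashlib.md5 and does not resolve aliases like "import hashlib as h", so treat it as a starting point; dedicated SAST tools handle these cases far more thoroughly.

```python
import ast

# Hypothetical deny list: dotted call names mapped to the reason they are banned.
BANNED_CALLS = {
    "hashlib.md5": "MD5 is not collision-resistant; use SHA-256 or a password KDF",
    "hashlib.sha1": "SHA-1 is deprecated for security use",
    "eval": "eval on untrusted input allows arbitrary code execution",
    "pickle.loads": "unpickling untrusted data allows arbitrary code execution",
}


def call_name(node):
    """Rebuild a dotted name such as 'hashlib.md5' from a call's func expression."""
    parts = []
    while isinstance(node, ast.Attribute):
        parts.append(node.attr)
        node = node.value
    if isinstance(node, ast.Name):
        parts.append(node.id)
        return ".".join(reversed(parts))
    return None  # e.g. calls on subscripts or call results are not matched


def scan(source, filename="<snippet>"):
    """Return sorted (line, call, reason) findings for banned calls in source."""
    findings = []
    for node in ast.walk(ast.parse(source, filename=filename)):
        if isinstance(node, ast.Call):
            name = call_name(node.func)
            if name in BANNED_CALLS:
                findings.append((node.lineno, name, BANNED_CALLS[name]))
    return sorted(findings)
```

In a CI/CD pipeline, a non-empty result from scan() could fail the build or open a review comment, for AI-generated and human-written code alike.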

Perform Risk Assessments

Before adopting any AI programming assistant, CISOs should conduct a thorough risk assessment. This includes evaluating the security posture of the AI tool provider, understanding how the tool handles sensitive data, and ensuring that no sensitive code or information is unintentionally shared with third parties. Cloud-based AI services, in particular, should be scrutinized for how they manage user data, including whether inputs are used for further training or are vulnerable to leaks.
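Part of keeping sensitive information away from third parties can be automated at the point where code is sent to a cloud assistant. The Python sketch below redacts a few secret patterns from a prompt before it leaves the organization; the patterns and the redact function are illustrative assumptions, and a real deployment would rely on a maintained secret-scanning rule set.

```python
import re

# Illustrative patterns only; real secret scanners ship far larger, tuned rule sets.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "hardcoded_password": re.compile(r"(?i)password\s*=\s*['\"][^'\"]+['\"]"),
}


def redact(text):
    """Replace matches with a labelled placeholder; return (text, labels found)."""
    found = []
    for label, pattern in SECRET_PATTERNS.items():
        text, count = pattern.subn(f"[REDACTED:{label}]", text)
        if count:
            found.append(label)
    return text, found
```

Returning the list of labels, and not just the cleaned text, lets a proxy or IDE plugin log that something was blocked, which also feeds the audits discussed later.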

Additionally, security policies should be put in place to govern the use of AI tools within the organization. Shadow IT, where developers adopt unapproved AI tools on their own, can pose a significant risk. Establishing clear guidelines about which AI tools can be used and under what conditions can help prevent the uncontrolled adoption of insecure solutions.

Train Developers on Secure Coding Practices

One of the key issues with AI-generated code is the false sense of security it can give to developers. Many developers assume that because the code is generated by AI, it must be secure. However, as numerous studies have shown, this is often not the case.

CISOs should ensure that developers receive regular training on secure coding practices and are educated about the limitations of AI programming assistants. Training should emphasize the importance of reviewing AI-generated code, testing for security vulnerabilities, and understanding when AI suggestions might be unsafe. This includes training on prompt engineering: crafting inputs that yield more reliable and secure AI outputs.

Develop a curriculum that teaches developers how to critically evaluate AI suggestions. This should include understanding the context in which the AI operates, recognizing common AI biases and mistakes, and knowing when to rely on human expertise over AI suggestions. Consider implementing a certification program for AI-assisted development to ensure all developers meet a baseline of competency.

Establish Clear Governance and Policies

Develop and enforce clear policies regarding the use of AI programming assistants. These policies should cover which tools are approved for use, how they should be configured, and what types of projects or code are suitable for AI assistance. Ensure these policies are regularly updated to keep pace with the rapidly evolving AI landscape.

Continuous Monitoring and Updating

As AI tools evolve rapidly, continuous monitoring and assessment are critical to maintaining secure development environments. Regular audits should be conducted to assess the effectiveness of security measures and identify areas for improvement. Organizations should adopt an iterative approach to security, where findings from audits, incident reports, and new threat intelligence are used to update security controls and policies. Each iteration should aim to strengthen defenses by learning from past incidents and refining detection and response strategies. This kind of adaptive learning is essential to building resilience against evolving threats.

Iterative security enhancements are also key in fostering resilience. Security teams must be equipped to regularly revisit and adjust their defensive strategies, adapting to the fast-paced evolution of AI tools and cyber threats. Whether through monthly vulnerability assessments or real-time feedback loops from security operations, organizations should strive for continuous improvement to mitigate emerging risks in AI-assisted development.

The Path Forward: Securing AI-Assisted Development

AI programming assistants are becoming increasingly prevalent, offering significant productivity benefits while also introducing complex security challenges. As AI’s role in software development continues to expand, the nature of cybersecurity must evolve in parallel. The future of AI-assisted development will likely see tighter integration between AI-driven development tools and AI-enhanced security systems. We can expect AI-powered security solutions to not only monitor and defend against emerging threats but also to predict vulnerabilities through advanced pattern recognition and proactive threat hunting.

Looking ahead, AI’s potential to autonomously detect and respond to security incidents will become more sophisticated. This shift towards AI-driven resilience, where AI itself is used to defend against AI-generated threats, will be a critical frontier for CISOs to explore. Continuous learning from both the tools and the environments in which they operate will allow security teams to better predict, prevent, and respond to the dynamic landscape of cyber threats.

Furthermore, the ethical and regulatory landscape surrounding AI will continue to evolve, with CISOs needing to anticipate potential legal and compliance challenges. Future-forward organizations will not only focus on today’s risks but also prepare for the implications of AI’s continued integration into critical systems, ensuring their security frameworks are resilient, adaptable, and forward-looking.

SPONSORED BY

Writer RAG tool: build production-ready RAG apps in minutes

RAG in just a few lines of code? We’ve launched a predefined RAG tool on our developer platform, making it easy to bring your data into a Knowledge Graph and interact with it using AI. With a single API call, Writer LLMs will intelligently call the RAG tool to chat with your data.

Integrated into Writer’s full-stack platform, it eliminates the need for complex vendor RAG setups, making it quick to build scalable, highly accurate AI workflows just by passing a graph ID of your data as a parameter to your RAG tool.

Worth a full read

This AI Pioneer Thinks AI Is Dumber Than a Cat

Key Takeaway

Yann LeCun, a pioneer in AI, dismisses warnings about AI's existential threat.

Skepticism about AI's potential can provide a balanced perspective in the field.

Current AI models have limitations in mimicking human or even animal intelligence.

Fundamental research in AI, such as neural networks, has paved the way for powerful AI systems.

Disagreements among AI pioneers highlight the complexity and uncertainty in the field.

The hype around AI's development can overshadow its limitations and risks.

The belief in the imminent creation of AGI might be premature.

A shift in AI design, rather than scale, may be key to achieving AGI.

Large language models (LLMs) can give an illusion of intelligence without actual reasoning capabilities.

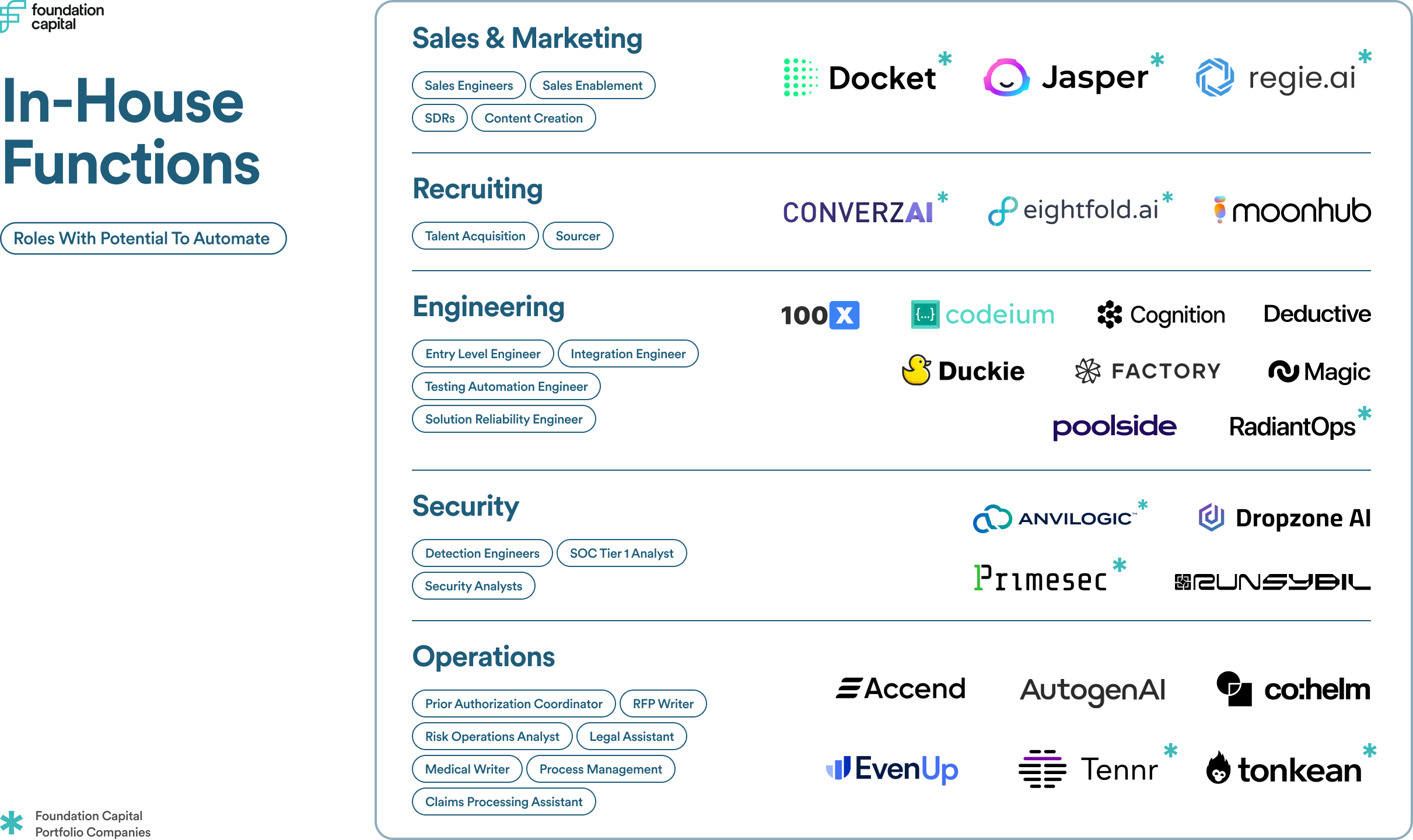

AI leads a service-as-software paradigm shift

Key Takeaway

AI is driving a paradigm shift from Software-as-a-Service to Service-as-Software.

The rise of AI is opening up a $4.6 trillion market opportunity.

AI's potential to automate complex tasks is disrupting traditional business models.

An outcome-oriented approach aligns software costs with business value.

The Service-as-Software shift applies to a wide range of business functions.

AI's ability to understand context and user intent is a major disruption factor.

The advent of AI-powered solutions doesn't necessarily mean the end of SaaS.

Service-as-software represents a transformative shift, with AI leading the way.

Services delivered as software are continuously learning and evolving.

The size of the opportunity for AI disruption is significantly larger than any single software company.

Research Paper

GSM-Symbolic: Understanding the Limitations of Mathematical Reasoning in Large Language Models

Summary: The paper investigates the mathematical reasoning capabilities of large language models (LLMs) using the GSM8K benchmark and introduces GSM-Symbolic, a new benchmark with symbolic templates to generate diverse question variants. The study reveals that LLMs show significant performance variance when responding to different instantiations of the same question, particularly when numerical values are altered. The performance of all models declines with increased question complexity, suggesting that current LLMs rely on pattern matching rather than genuine logical reasoning. The introduction of GSM-NoOp further exposes LLMs' inability to discern relevant information, as adding irrelevant clauses leads to substantial performance drops. The findings highlight the need for more reliable evaluation methodologies and further research into LLMs' reasoning capabilities.
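To illustrate the idea behind symbolic templates, here is a small Python sketch in the spirit of GSM-Symbolic: names and numbers are variables, the ground-truth answer is computed from them, and an optional irrelevant clause mimics a GSM-NoOp variant. The template itself is a made-up example, not one taken from the paper.

```python
import random

# Hypothetical template: names and numbers are placeholders, not fixed values.
TEMPLATE = ("{name} picks {x} apples on Monday and {y} apples on Tuesday. "
            "{noop}How many apples does {name} have?")
# GSM-NoOp-style clause: sounds relevant but must not change the answer.
NOOP_CLAUSE = "Five of the apples are slightly smaller than average. "
NAMES = ["Sophie", "Liam", "Ava", "Noah"]


def instantiate(rng, with_noop=False):
    """Draw one question variant and compute its ground-truth answer."""
    name = rng.choice(NAMES)
    x, y = rng.randint(5, 50), rng.randint(5, 50)
    question = TEMPLATE.format(name=name, x=x, y=y,
                               noop=NOOP_CLAUSE if with_noop else "")
    # The answer comes from the variables, so every variant is self-consistent.
    return question, x + y
```

Seeding the random generator makes each variant reproducible, so the same question can be evaluated with and without the no-op clause; a model that truly reasons should give the same answer to both.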

Published: 2024-10-07T17:36:37Z

Authors: Iman Mirzadeh, Keivan Alizadeh, Hooman Shahrokhi, Oncel Tuzel, Samy Bengio, Mehrdad Farajtabar

Organizations: Apple

Findings:

LLMs show significant performance variance on different question instantiations.

Performance declines with increased question complexity.

LLMs rely on pattern matching, not genuine logical reasoning.

GSM-NoOp reveals LLMs' inability to discern relevant information.

Final Score: Grade: B, Explanation: Novel and empirical study, but lacks detailed statistical analysis and confidence intervals.

Wisdom of the week

The point of modern propaganda isn't only to misinform or push an agenda. It is to exhaust your critical thinking, to annihilate truth.

Contact

Let me know if you have any feedback or any topics you want me to cover. You can ping me on LinkedIn or on Twitter/X. I’ll do my best to reply promptly!

Thanks! See you next week! Simon