
Cyber AI Chronicle

By Simon Ganiere · 18th January 2026

Welcome back!

ServiceNow users faced a nightmare scenario this week when researchers uncovered a vulnerability that turned email addresses into master keys for complete system takeover.

The BodySnatcher flaw highlights a troubling reality: as AI agents gain more privileges within enterprise systems, simple authentication bugs can escalate into organization-wide breaches. Are we moving too fast with AI integration without properly securing the foundational systems that power them?

In today's AI recap:

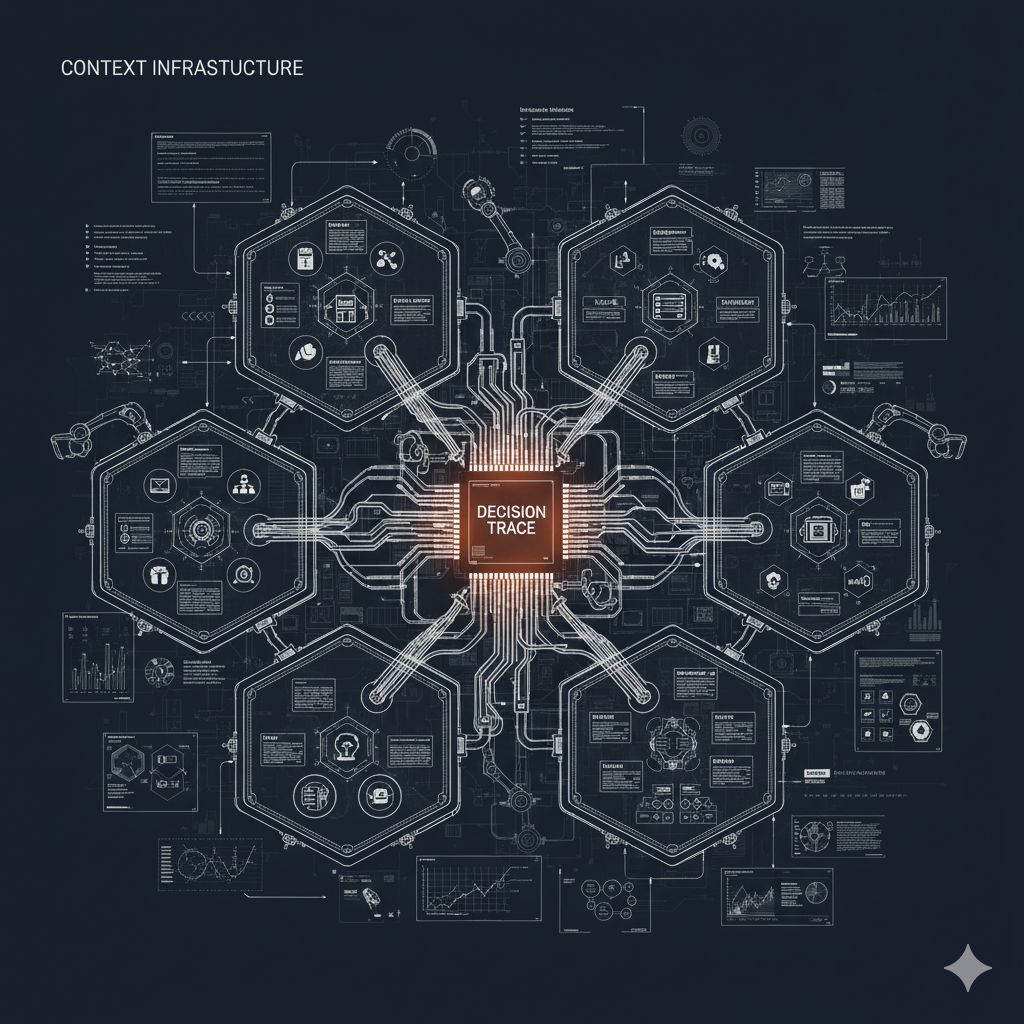

My work

I published a two-part blog post about context graphs: why they matter across the enterprise, what they mean from a security perspective, and what security organisations can do about them. It’s a bit longer than the usual read, as the topic requires a lot more introduction and context. Let me know what you think. I would love to chat about it, and if you like it, feel free to repost it and share it with your network or community.

The BodySnatcher in ServiceNow

What you need to know: A critical vulnerability in ServiceNow's AI platform, dubbed BodySnatcher, allowed attackers to impersonate any user with just an email address, bypassing MFA and SSO to hijack powerful AI agents. ServiceNow has since patched the flaw.

Why is it relevant?:

The vulnerability chained two weaknesses: a single hardcoded, platform-wide secret used for AI integrations, and an auto-linking feature that required only an email address to confirm a user's identity.

Attackers could use this impersonation to execute privileged AI agents on the user's behalf, creating backdoor admin accounts and gaining full system access. ServiceNow has detailed the patched versions for affected customers.

This incident shows how agentic AI amplifies traditional flaws; a simple authentication bypass, tracked as CVE-2025-12420, became a critical system takeover risk because a powerful AI agent could be remotely controlled.

Bottom line: This vulnerability is a major wake-up call for securing AI integrations within enterprise platforms. Security teams must now scrutinize not just the AI models, but also the entire API and authentication framework supporting them to prevent simple bugs from becoming full-scale breaches.

AI-native CRM

“When I first opened Attio, I instantly got the feeling this was the next generation of CRM.”

— Margaret Shen, Head of GTM at Modal

Attio is the AI-native CRM for modern teams. With automatic enrichment, call intelligence, AI agents, flexible workflows and more, Attio works for any business and only takes minutes to set up.

Join industry leaders like Granola, Taskrabbit, Flatfile and more.

Block's Trojan Goose

What you need to know: Block’s CISO shared how its red team successfully tricked the company’s internal AI agent, Goose, into executing an infostealer on an employee's laptop. The demonstration was part of a proactive security exercise to uncover AI-specific vulnerabilities.

Why is it relevant?:

The attack combined a phishing email with a prompt injection payload hidden inside invisible Unicode characters within a sharable workflow, tricking both the user and Block’s open source AI agent.

As a direct result, Block’s developers added new safeguards to Goose, including a “recipe install warning” that prompts users to confirm they trust a workflow’s source before execution.

Block is now exploring adversarial AI as a defense, experimenting with a second AI agent whose job is to check prompts and outputs for anything malicious before they are executed.

Bottom line: This real-world test proves that AI agents introduce a significant and novel attack surface into corporate environments. Organizations must begin red-teaming their own AI systems to understand and mitigate these new risks.
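To make the invisible-character trick from this story more concrete, here is a minimal Python sketch of a pre-flight scan for shared workflow text. It is not Block's or Goose's actual safeguard. The function names are hypothetical, and it assumes the hidden payload uses Unicode format characters (category `Cf`, such as zero-width spaces) or the Unicode "tag" block, which can mirror ASCII without rendering. The source only says "invisible Unicode characters", so treat the exact ranges as an assumption.

```python
import unicodedata

# Assumption: Unicode "tag" characters (U+E0000-U+E007F) are one likely carrier,
# because each one mirrors an ASCII character but does not render.
TAG_BLOCK = range(0xE0000, 0xE0080)


def find_invisible_chars(text: str) -> list[tuple[int, str]]:
    """Return (index, code point label) for every invisible format character."""
    hits = []
    for index, char in enumerate(text):
        code_point = ord(char)
        # Category "Cf" covers zero-width spaces and joiners, bidi controls
        # and the assigned tag characters.
        if code_point in TAG_BLOCK or unicodedata.category(char) == "Cf":
            hits.append((index, f"U+{code_point:04X}"))
    return hits


def decode_tag_payload(text: str) -> str:
    """Recover ASCII text smuggled as Unicode tag characters, if any."""
    return "".join(
        chr(ord(char) - 0xE0000)
        for char in text
        if 0xE0020 <= ord(char) <= 0xE007E
    )
```

A scan like this will also flag legitimate uses of format characters, such as the zero-width joiner in some emoji and the soft hyphen, so it is better suited to prompting a human review than to blocking outright.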

Microsoft Copilot's 'Reprompt' Attack

What you need to know: Security researchers at Varonis discovered a single-click attack method called Reprompt that could hijack Microsoft Copilot sessions, silently stealing user data and conversation history. Microsoft has since patched the vulnerability, which only affected Copilot Personal.

Why is it relevant?:

The attack began with a single click on a phishing link, which injected malicious instructions directly into Copilot through a URL parameter without the user's knowledge.

Attackers creatively bypassed security controls by instructing Copilot to perform actions twice, as the safeguards only applied to the initial request.

A “chain-request” technique enabled continuous data exfiltration by instructing Copilot to fetch new commands from an attacker's server, creating a persistent and stealthy communication channel.

Bottom line: This attack highlights how AI assistants create new, unexpected vectors for data theft that can sidestep traditional client-side security tools. As AI integrates deeper into our workflows, security teams must treat prompt injection and session hijacking as primary threats to user data and privacy.
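For teams that filter links in email or at a proxy, the URL-parameter entry point described above can be approximated with a check like the one below. This is a rough sketch under stated assumptions, not Microsoft's fix or Varonis's detection logic. The host list and the parameter names (`q`, `prompt`) are illustrative guesses, since the source only says that instructions were injected "through a URL parameter".

```python
from urllib.parse import parse_qs, urlparse

# Assumptions for illustration only: which hosts count as AI assistants
# and which query parameters might carry a pre-filled prompt.
ASSISTANT_HOSTS = {"copilot.microsoft.com"}
PROMPT_PARAMS = {"q", "prompt"}


def prefilled_prompts(url: str) -> list[str]:
    """Return any prompt text a link would pre-load into an assistant."""
    parsed = urlparse(url)
    if parsed.hostname not in ASSISTANT_HOSTS:
        return []
    query = parse_qs(parsed.query)
    # Sorted iteration keeps the result order deterministic.
    return [
        value
        for name in sorted(PROMPT_PARAMS)
        for value in query.get(name, [])
    ]
```

Any non-empty result means the link arrives with instructions already attached, which is worth surfacing to the user before they click.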

North Korea's AI-Powered Ghost Workers

What you need to know: North Korean IT operatives are using stolen identities and AI to secure high-paying remote jobs at Western firms. This creates a significant insider threat and generates hundreds of millions of dollars for the regime by exploiting the remote work ecosystem.

Why is it relevant?:

The operation is a massive revenue generator, with estimates suggesting the remote worker and crypto-theft schemes funnel up to $600 million annually to the North Korean regime.

Operatives defeat identity verification using AI-driven deepfakes during video interviews, altering their image, voice, and even accent to match stolen credentials.

They use multi-layered proxy chains and domestic "laptop farms" to bypass existing controls, making their traffic appear as legitimate domestic remote work and defeating traditional geofencing.

Bottom line: The modern insider threat is no longer just a disgruntled employee, but a state-sponsored actor who is a trusted user from their first day. Verifying a remote worker's identity is not enough; security teams must now validate their physical location to mitigate this growing threat.

The Poisoned Model

What you need to know: Security researchers discovered that popular AI libraries from Nvidia, Salesforce, and Apple contain a critical flaw. This vulnerability allows an attacker to hide malicious code in a model’s metadata, which executes automatically when the model is loaded.

Why is it relevant?:

The core issue stems from Meta's Hydra configuration library, where a function intended to build objects from metadata could be tricked into running any arbitrary code.

This flaw creates a significant AI supply chain risk, as attackers can upload compromised models to public hubs like Hugging Face, where over 700 models use the vulnerable Nvidia format alone.

In response, the affected companies have issued patches, and Meta updated Hydra's documentation to warn developers about the potential for remote code execution.

Bottom line: This incident proves that even with "safe" model formats, the software that loads them is a critical new attack surface for security teams to monitor. It highlights the urgent need to scrutinize the entire MLOps toolchain, not just the final model artifact.
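As an illustration of the kind of check this implies, the sketch below walks a model's configuration metadata before anything is instantiated. Hydra's `instantiate()` builds whatever callable a config's `_target_` key names, so a loader could refuse any target outside an allowlist. This is only a sketch under assumptions: the allowlist prefixes and function names are hypothetical, the metadata is assumed to be already parsed into plain dicts and lists, and it does not represent the patches the affected vendors shipped.

```python
from typing import Any

# Hypothetical allowlist: module prefixes this project is willing to instantiate.
ALLOWED_PREFIXES = ("torch.nn.", "my_project.models.")


def collect_targets(config: Any) -> list[str]:
    """Recursively gather every `_target_` value in a nested config."""
    targets = []
    if isinstance(config, dict):
        for key, value in config.items():
            if key == "_target_":
                targets.append(str(value))
            else:
                targets.extend(collect_targets(value))
    elif isinstance(config, (list, tuple)):
        for item in config:
            targets.extend(collect_targets(item))
    return targets


def disallowed_targets(config: Any) -> list[str]:
    """Return the targets that fall outside the allowlist."""
    return [
        target
        for target in collect_targets(config)
        if not target.startswith(ALLOWED_PREFIXES)
    ]


# Example: metadata that mixes a normal layer with a smuggled call.
metadata = {
    "model": {"_target_": "torch.nn.Linear", "in_features": 4, "out_features": 2},
    "hooks": [{"_target_": "builtins.eval", "_args_": ["1 + 1"]}],
}
print(disallowed_targets(metadata))
```

An allowlist is the safer default here because a denylist has to anticipate every dangerous callable, while an allowlist only has to name the few constructors a model legitimately needs.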

The Shortlist

PromptArmor revealed a file exfiltration attack in Anthropic's new Cowork tool. It exploits the same API vulnerability that researchers had previously reported in Claude Code, which the company had acknowledged but not fixed.

Check Point linked the GoBruteforcer botnet's success to the mass reuse of weak, default credentials found in AI-generated server deployment examples, which are now being exploited to target cryptocurrency and blockchain projects.

isVerified emerged from stealth with mobile apps that perform real-time, on-device detection of voice deepfakes during phone calls, aiming to counter AI-driven vishing attacks against enterprises.

PNNL unveiled ALOHA, an AI-based system that reconstructs cyberattacks from threat reports, allowing security teams to automatically generate and run adversary emulations to test defenses in hours instead of weeks.

Wisdom of the week

Because you are alive,

everything is possible.

AI Influence Level

Level 4 - AI Created, Human Basic Idea / The whole newsletter is generated via an n8n workflow based on publicly available RSS feeds. A human in the loop reviews the selected articles and subjects.

Reference: AI Influence Level from Daniel Miessler

Till next time!

Project Overwatch is a cutting-edge newsletter at the intersection of cybersecurity, AI, technology, and resilience, designed to navigate the complexities of our rapidly evolving digital landscape. It delivers insightful analysis and actionable intelligence, empowering you to stay ahead in a world where staying informed is not just an option, but a necessity.